In the first part of this series, I spoke about the natural inevitability of speech as a primary method for interfacing with technology. I painted a picture of how absurd human interaction would be if limited to the traditional methods available for interacting with technology.

The flip side of the ridiculous example from part 1 is “normal” human communication. Rich human-to-human interaction is done primarily with voice, and augmented with body language like facial expressions and gestures. (The only way to go deeper into rich human interaction is to begin to consider shared experience and culture, which is way beyond the scope of current AI. I’ll leave this to the far-futurists like Ray Kurzweil… maybe a book, “The Age of Culturally-Aware Machines”?)

In part 2, we looked at some reasons why many users remain lukewarm about the promise of voice command: specifically, the past 25 years of voice applications that have not lived up to their promises… or even to basic standards of usability, for that matter.

I ended the previous post with a promise to tell you what has changed after 25 years of mediocre voice command, and I have provided a list below. Some of these changes have been gradual, and others more abrupt. Taken together, they all create the capabilities needed for voice interface to finally function well enough to attract mainstream adoption.

Before the list, here’s an overview of what’s happening:

Have you noticed that when you ask Alexa something and she doesn’t have a clear answer, sometimes she takes a shot at it and asks, “Did that answer your question?”

This is real-time data collection: millions of voice users providing feedback about whether “it’s working.”

This means every time you use your voice to interact with Alexa, Siri, Google, or any voice service, you are providing valuable feedback to the machine learning algorithm and thereby helping the system work better. Which leads me to…

Voice-Enabling Technology change #1:

smaller/cheaper personal devices, widespread adoption = big data

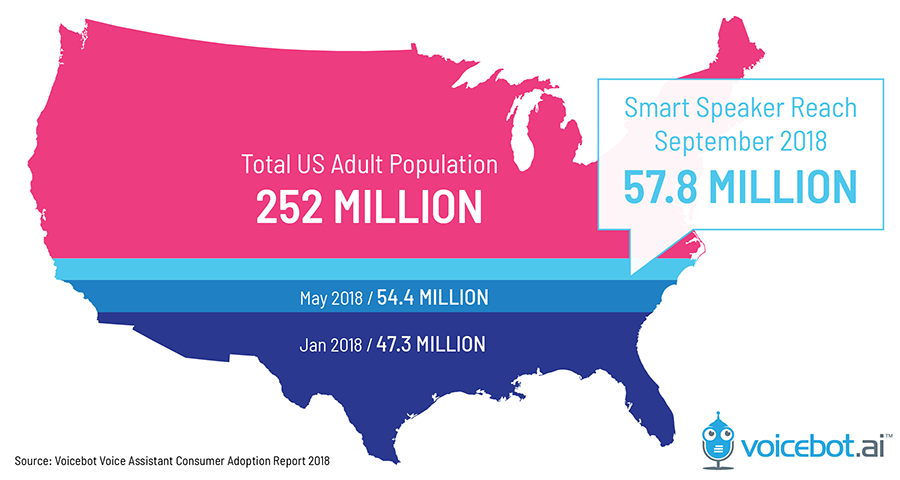

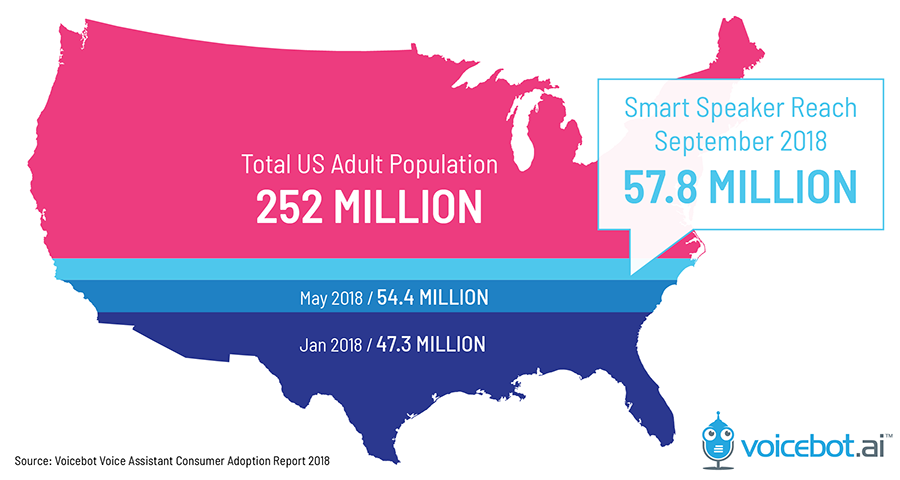

More users, to the tune of more than 1 in 5 US adults, are experimenting with voice interaction thanks to the availability of apps like Alexa, Siri, and Google Assistant on smartphones and the availability of low-cost smart speakers. The result is that big players like Google, Amazon, Apple, and Microsoft have a whole lot of data available to feed machine learning.

Voice-Enabling Technology change #2:

CPU power

More CPU power is available per hardware unit to run the machine learning algorithms, analyze nuanced speech, including CONTEXT, and make probabilistic predictions rather than attempt to match against a limited dictionary of fixed patterns.
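To make the difference concrete, here is a minimal sketch in Python of a fixed-pattern matcher next to a toy probabilistic one. Everything in it is my own illustration: the command dictionary, the handful of training phrases, the intent names, and the choice of a tiny Naive Bayes word model are all assumptions, and none of it is how Alexa, Siri, or Google Assistant actually works. It only shows the general idea of picking the most likely meaning instead of demanding an exact match.

```python
import math
from collections import Counter

# Hypothetical fixed dictionary: only these exact phrases are understood.
FIXED_COMMANDS = {
    "play music": "PLAY_MUSIC",
    "stop music": "STOP_MUSIC",
    "what time is it": "TELL_TIME",
}

def match_fixed(utterance):
    """Old-style matching: exact phrase or nothing."""
    return FIXED_COMMANDS.get(utterance.lower().strip())

# Made-up example phrases standing in for real usage data.
TRAINING = [
    ("play some music", "PLAY_MUSIC"),
    ("put on a song", "PLAY_MUSIC"),
    ("play my playlist", "PLAY_MUSIC"),
    ("stop the music", "STOP_MUSIC"),
    ("turn the song off", "STOP_MUSIC"),
    ("what time is it", "TELL_TIME"),
    ("tell me the time", "TELL_TIME"),
]

def train(examples):
    """Count how often each word appears under each intent."""
    word_counts = {}
    intent_counts = Counter()
    vocab = set()
    for text, intent in examples:
        intent_counts[intent] += 1
        counts = word_counts.setdefault(intent, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, intent_counts, vocab

def predict(utterance, model):
    """Return a probability for every intent (Naive Bayes, add-one smoothing)."""
    word_counts, intent_counts, vocab = model
    total = sum(intent_counts.values())
    log_scores = {}
    for intent, n in intent_counts.items():
        score = math.log(n / total)
        denom = sum(word_counts[intent].values()) + len(vocab)
        for word in utterance.lower().split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[intent][word] + 1) / denom)
        log_scores[intent] = score
    # Turn log scores into probabilities that sum to 1.
    top = max(log_scores.values())
    exp_scores = {k: math.exp(v - top) for k, v in log_scores.items()}
    norm = sum(exp_scores.values())
    return {k: v / norm for k, v in exp_scores.items()}

if __name__ == "__main__":
    phrase = "could you play a song"
    print("fixed match:", match_fixed(phrase))
    probabilities = predict(phrase, train(TRAINING))
    print("best guess:", max(probabilities, key=probabilities.get))
```

The fixed matcher has nothing to say about a phrase it has never seen word for word, while the probabilistic one still produces a ranked guess. Adding more example phrases improves the guesses without anyone rewriting the matching logic.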

Voice-Enabling Technology change #3:

cloud computing

Not only do we have the continual increase in power per CPU associated with Moore’s Law, but we also have the parallel computing power and cloud infrastructure of big players like Google, Amazon, Apple, and Microsoft.

Voice-Enabling Technology change #4:

machine learning

All that data mentioned in #1 gets fed into machine learning algorithms. Gone are the days of manually modeling how speech should be recognized. Now all that’s required is setting the parameters and feeding the data into the machine learning system so IT builds the model.

In summary, widespread use of voice assistant apps has produced loads of data, and we finally have enough processing power to feed that data into machine learning algorithms that make voice recognition better.

Inevitability of voice user interface

The inevitability of natural voice interaction with technology is one thing that has NOT changed. Humans want to communicate naturally. Everything else, from keyboards to touch screens, has been a compromise to allow us access to the power of technology.

This power and inevitability is an open secret, known not just to futurists but to anyone with a technology roadmap that approaches the horizon. It is the very reason we have had 25 years of false starts: smart companies are desperate to be in the market when it finally takes off!

And it is taking off now.

What can you do to be positioned to best serve your market and not be left behind by competitors as voice adoption settles into mainstream use?

To see the future, we must look to the past. If you could go back to 1996, how would you want to position yourself online?

In the next part of this series, I will compare the current state of voice search to the WWW of 1996, and suggest some ways to “stake your claim” to your brand and key phrases.

TO BE CONTINUED

Voice Command Has Arrived… Again. For the Last Time Part 3 was first published to Medium.